Microsoft on virtualizing Exchange 2013:

Why virtualize?

• Server consolidation

• Homogeneous infrastructure

• Deployment optimizations

• Management optimizations

• Monitoring optimizations

• Hardware utilization

• Reduced Capex

Why not?

• Added complexity

• Additional deployment steps

• Additional management layer

• Additional monitoring layer

• Performance impact

• Workload incompatibility/unsupportability

• Cost of hypervisor

Other considerations:

• Have you already virtualized everything else?

• Exchange 2013 virtualization is fully supported by Microsoft on hypervisors from vendors participating in the Server Virtualization Validation Program (SVVP).

• Design for scale-out, not scale-up.

VMware on virtualizing Exchange 2013:

Logical design:

• Determine the approach to virtualizing Exchange (how many servers, role placement, clustering, DAGs, etc.).

• Design Exchange based on number of users and user profile.

• Calculate CPU and memory requirements for all roles.

• Calculate storage requirements for Mailbox server(s).

• Design VM based on Exchange design and vSphere capabilities.

ESXi server architecture:

• Determine VM distribution across the cluster.

• Determine ESXi host specifications (consider scale-up vs. scale-out, including licensing, and compare processors using the SPECint_rate2006 benchmark from spec.org).

• Determine initial VM placement.

Supported vSphere features: vMotion, DRS, Storage vMotion, and vSphere HA.

Supported storage protocols for DAG: FC, iSCSI, FCoE.

CPU:

• Disable HT sharing in VM properties (MS says to disable HT, VMware says to leave it on).

• Keep the number of vCPUs less than or equal to the number of physical cores in a NUMA node (for local memory access).

• Use multiple virtual sockets/CPUs with a single virtual core per socket in VMware.

• Over-allocating vCPUs can negatively impact performance (high CPU ready and co-stop times).

Microsoft on CPU:

- If you have a physical deployment, turn off hyperthreading.

- If you have a virtual deployment, you can enable hyperthreading (best to follow the recommendation of your hypervisor vendor), and:

- Don’t allocate extra virtual CPUs to Exchange server guest VMs.

- Don’t use the extra logical CPUs exposed to the host for sizing/capacity calculations

Exchange 2013 supports a VP:LP ratio of no greater than 2:1, and a ratio of 1:1 is recommended.
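A minimal sketch of checking a host against these ratios, assuming physical cores are counted as the logical processors (in line with the advice above not to size on the extra hyperthreaded CPUs). The function names and the 36 vCPU / 24 core host are hypothetical.

```python
def vp_lp_ratio(total_vcpus: int, logical_processors: int) -> float:
    """Ratio of virtual processors (VP) assigned to VMs to logical processors (LP) on the host."""
    if logical_processors <= 0:
        raise ValueError("logical_processors must be positive")
    return total_vcpus / logical_processors


def classify_vp_lp(total_vcpus: int, logical_processors: int) -> str:
    """Compare a host against the 1:1 recommendation and the 2:1 supported maximum."""
    ratio = vp_lp_ratio(total_vcpus, logical_processors)
    if ratio <= 1.0:
        return "recommended"  # 1:1 or lower
    if ratio <= 2.0:
        return "supported"  # above 1:1 but within 2:1
    return "unsupported"  # above 2:1


# Hypothetical host: 24 physical cores, VMs with 36 vCPUs in total (ratio 1.5).
print(classify_vp_lp(36, 24))  # supported
```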

When enabled, HT increases CPU throughput modestly (potentially by around 30%), but it can also dramatically increase base memory utilization (potentially doubling it).

Customers can use processors that support Second Level Address Translation (SLAT) technologies (that is, SLAT-based processors). SLAT technologies add a second level of paging functionality under the paging tables of x86/x64 processors. They provide an indirection layer that maps virtual machine memory addresses to physical memory addresses, which reduces load on the hypervisor for address translation.

SLAT technologies also help to reduce CPU and memory overhead, thereby allowing more virtual machines to be run concurrently on a single Hyper-V machine. The Intel SLAT technology is known as Extended Page Tables (EPT); the AMD SLAT technology is known as Rapid Virtualization Indexing (RVI), formerly Nested Page Tables (NPT).

Some Intel multicore processors may use Intel Hyper-Threading Technology. When Hyper-Threading Technology is enabled, the actual number of cores that are used by Dynamic Virtual Machine Queue (D-VMQ) should be half the total number of logical processors that are available in the system. This is because D-VMQ spreads the processing across individual physical cores only, and it does not use hyper-threaded sibling cores.

VMware on CPU:

When designing for a virtualized Exchange implementation, sizing should be conducted with physical cores in mind. Although Microsoft supports a maximum virtual CPU to physical CPU overcommitment ratio of 2:1, the recommended practice is to keep this as close to 1:1 as possible.

Virtual machines, including those running Exchange 2013, should be configured with multiple virtual sockets/CPUs. Only use the Cores per Socket option if the guest operating system requires the change to make all vCPUs visible. Virtual machines using vNUMA might benefit from this option, but the recommendation for these virtual machines is generally to use virtual sockets (CPUs in the web client). Exchange 2013 is not a NUMA-aware application, and performance tests have not shown any significant performance improvements by enabling vNUMA.

Memory:

• Keep vRAM less than or equal to the physical RAM of a NUMA node (for local memory access).

• If memory is oversubscribed, reserve the allocated memory (a reservation can limit vMotion).

• Set the page file minimum and maximum size to the RAM allocated to the VM + 10 MB.
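The page file rule is simple arithmetic. A small sketch, assuming RAM is expressed in MB as in the Windows Virtual Memory dialog; the function name and the 64 GB VM are hypothetical.

```python
def exchange_page_file_mb(vm_ram_mb: int) -> int:
    """Page file size for an Exchange 2013 VM: configured RAM + 10 MB (min = max)."""
    return vm_ram_mb + 10


ram_mb = 64 * 1024  # hypothetical VM with 64 GB of RAM
size_mb = exchange_page_file_mb(ram_mb)
print(f"Initial size (MB): {size_mb}, Maximum size (MB): {size_mb}")
# Initial size (MB): 65546, Maximum size (MB): 65546
```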

Microsoft on memory:

Crossing the NUMA boundary can reduce virtual performance by as much as 8 percent. Therefore, configure a virtual machine to use resources from a single NUMA node. For Exchange Server, make sure that allocated memory is equal to or smaller than a NUMA boundary.

By default, a virtual machine gets its preferred NUMA node every time it runs. However, over time, an imbalance in the assignment of NUMA nodes to virtual machines may occur, because each virtual machine has ad hoc memory requirements or because the virtual machines can be started in any order. Therefore, we recommend that you use Perfmon to check the NUMA node preference settings for each running virtual machine with the \Hyper-V VM Vid Partition(*)\NumaNodeIndex counter.

Microsoft does not support Dynamic Memory for virtual machines that run any of the Exchange 2013 roles.

VMware on memory:

Do not overcommit memory on ESXi hosts running Exchange workloads. For production systems, it is possible to enforce this policy by setting a memory reservation to the configured size of the virtual machine. Setting memory reservations might limit vSphere vMotion. A virtual machine can only be migrated if the target ESXi host has free physical memory equal to or greater than the size of the reservation.

Non-Uniform Memory Access

In NUMA systems, a processor or set of processor cores has memory that it can access with very little latency. The memory and its associated processor or processor cores are referred to as a NUMA node. Operating systems and applications designed to be NUMA-aware can make decisions as to where a process might run, relative to the NUMA architecture. This allows processes to access memory local to the NUMA node rather than having to traverse an interconnect, incurring additional latency. Exchange 2013 is not NUMA-aware, but ESXi is.

vSphere ESXi provides mechanisms for letting virtual machines take advantage of NUMA. The first mechanism is transparently managed by ESXi while it schedules a virtual machine’s virtual CPUs on NUMA nodes. By attempting to keep all of a virtual machine’s virtual CPUs scheduled on a single NUMA node, memory access can remain local. For this to work effectively, the virtual machine should be sized to fit within a single NUMA node. This placement is not a guarantee because the scheduler migrates a virtual machine between NUMA nodes based on the demand.

The second mechanism for providing virtual machines with NUMA capabilities is vNUMA. When enabled for vNUMA, a virtual machine is presented with the NUMA architecture of the underlying hardware. This allows NUMA-aware operating systems and applications to make intelligent decisions based on the underlying host’s capabilities. vNUMA is enabled by default for virtual machines with nine or more vCPUs. Because Exchange 2013 is not NUMA-aware, enabling vNUMA for an Exchange virtual machine does not provide any additional performance benefit.

Verify that all ESXi hosts have NUMA enabled in the system BIOS. In some systems NUMA is enabled by disabling node interleaving.

Storage:

Block size: 32 KB

DB read/write ratio: 3:2

I/O profile:

DB I/O: 32 KB random

Background DB maintenance: 256 KB sequential read

Log I/O: varies from 4 KB to 1 MB
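To turn a total database IOPS estimate into separate read and write targets, plus a rough throughput figure, the 3:2 ratio and 32 KB I/O size above can be applied directly. A sketch with a hypothetical 1,000 IOPS estimate and hypothetical function names; it covers transactional database I/O only, not background maintenance or log I/O.

```python
DB_IO_SIZE_KB = 32  # database I/O size from the profile above
READ_PARTS, WRITE_PARTS = 3, 2  # database read/write ratio 3:2


def split_db_iops(total_iops: float) -> tuple[float, float]:
    """Split total database IOPS into (read IOPS, write IOPS) using the 3:2 ratio."""
    parts = READ_PARTS + WRITE_PARTS
    return total_iops * READ_PARTS / parts, total_iops * WRITE_PARTS / parts


def db_throughput_mb_s(total_iops: float) -> float:
    """Approximate database throughput in MB/s at 32 KB per I/O."""
    return total_iops * DB_IO_SIZE_KB / 1024


reads, writes = split_db_iops(1000)  # hypothetical estimate
print(reads, writes)  # 600.0 400.0
print(db_throughput_mb_s(1000))  # 31.25
```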

• Provide at least 30 GB of space on the drive where you will install Exchange.

• Add an additional 500 MB of available disk space for each Unified Messaging (UM) language pack that you plan to install.

• Add 200 MB of available disk space on the system drive.

• Use a hard disk with at least 500 MB of free space to store the message queue database, which can be co-located on the system drive, assuming you have accurately planned for enough space and I/O throughput.

• Use multiple vSCSI adapters/controllers.

• Use eager zeroed thick VMDK or fixed-size VHDX disks.

• Format Windows NTFS volumes for databases and logs with an allocation unit size of 64 KB.

• Follow storage vendor recommendations for path policy.

• Use the pre-production tools Jetstress and Exchange Load Generator (LoadGen) to evaluate storage performance.

Sizing DBs for improved manageability:

• DAG replicated: up to 2 TB (to avoid reseeding issues).

• Non-DAG (not replicated): up to 200 GB (easier management and recovery).

• Consider backup and restore times when calculating the database size.
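One way to apply these caps is to derive the minimum number of databases from the total mailbox data. A sketch, assuming 2 TB = 2048 GB and hypothetical input sizes; the function name is hypothetical, and backup and restore times may justify smaller databases than this minimum suggests.

```python
import math

MAX_DB_SIZE_GB = {True: 2048, False: 200}  # key: is the database DAG-replicated?


def min_databases(total_mailbox_data_gb: float, dag_replicated: bool) -> int:
    """Smallest number of databases that keeps each one at or under the size cap."""
    cap = MAX_DB_SIZE_GB[dag_replicated]
    return math.ceil(total_mailbox_data_gb / cap)


print(min_databases(10_000, dag_replicated=True))   # 5
print(min_databases(10_000, dag_replicated=False))  # 50
```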

Microsoft on storage:

Using differencing disks and dynamically expanding disks is not supported in a virtualized Exchange 2013 environment.

Do not use hypervisor snapshots for the virtual machines in an Exchange 2013 production environment.

The virtual IDE controller must be used for booting the virtual machine; however, all other drives should be attached to the virtual SCSI controller. This ensures optimal performance, as well as the greatest flexibility. Each virtual machine has a single virtual SCSI controller by default, but three more can be added while the virtual machine is offline.

VMware on storage:

Verify whether there are any benefits in using PVSCSI over LSI Logic SAS.

The default HBA queue depth (32 for LSI Logic SAS and 64 for PVSCSI) can be increased.

Install up to 4 vSCSI adapters and distribute data VMDKs evenly across these adapters.

Compared to VMDK, RDM does not provide significant performance improvements.

Virtual SCSI Adapters

VMware provides two commonly used virtual SCSI adapters for Windows Server 2008 R2 and Windows Server 2012: LSI Logic SAS and VMware Paravirtual SCSI (PVSCSI). The default adapter when creating new virtual machines with either of these two operating systems is LSI Logic SAS, and this adapter can satisfy the requirements of most workloads. Because it is the default and requires no additional drivers, many organizations have standardized on it.

The Paravirtual SCSI adapter is a high-performance vSCSI adapter developed by VMware to provide optimal performance for virtualized business critical applications. The advantage of the PVSCSI adapter is that the added performance is delivered while minimizing the use of hypervisor CPU resources. This leads to less hypervisor overhead required to run storage I/O-intensive applications.

Exchange 2013 has greatly reduced the amount of I/O generated to access mailbox data; however, storage latency is still a factor. In environments supporting thousands of users per Mailbox server, PVSCSI might prove beneficial. The decision on whether to use LSI Logic SAS or PVSCSI should be made based on Jetstress testing of the predicted workload using both adapters. Additionally, organizations must consider any management overhead an implementation of PVSCSI might introduce. Because many organizations have standardized on LSI Logic SAS, if the latency and throughput difference is negligible with the proposed configuration, the best option might be the one with the least impact to the current environment.

Virtual machines can be deployed with up to four virtual SCSI adapters. Each vSCSI adapter can accommodate up to 15 storage devices for a total of 60 storage devices per virtual machine. During allocation of storage devices, each device is assigned to a vSCSI adapter in sequential order. Not until a vSCSI adapter reaches its 15th device will a new vSCSI adapter be created. To provide better parallelism of storage I/O, equally distribute storage devices among the four available vSCSI adapters.
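The even distribution can be expressed as a round-robin assignment instead of the default sequential fill. A minimal sketch; the function and disk names are hypothetical, and it only plans the layout (it does not call any vSphere API).

```python
MAX_ADAPTERS = 4
MAX_DEVICES_PER_ADAPTER = 15


def plan_vscsi_layout(disks: list[str]) -> dict[int, list[str]]:
    """Assign disks to vSCSI adapters 0-3 round-robin instead of filling adapter 0 first."""
    if len(disks) > MAX_ADAPTERS * MAX_DEVICES_PER_ADAPTER:
        raise ValueError("a VM supports at most 60 devices on 4 vSCSI adapters")
    layout: dict[int, list[str]] = {adapter: [] for adapter in range(MAX_ADAPTERS)}
    for index, disk in enumerate(disks):
        layout[index % MAX_ADAPTERS].append(disk)
    return layout


disks = ["DB1", "DB2", "DB3", "DB4", "LOG1", "LOG2", "LOG3", "LOG4"]
for adapter, assigned in plan_vscsi_layout(disks).items():
    print(adapter, assigned)
# 0 ['DB1', 'LOG1']
# 1 ['DB2', 'LOG2']
# 2 ['DB3', 'LOG3']
# 3 ['DB4', 'LOG4']
```

With this hypothetical naming, each adapter ends up carrying one database disk and one log disk.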

EMC on storage:

Both Thick and Thin LUNs can be used for Exchange storage (database and logs). Thick LUNs are recommended for heavy workloads with high IOPS user profiles. Thin LUNs are recommended for light to medium workloads with low IOPS user profiles.

Format Windows NTFS volumes for databases and logs with an allocation unit size of 64 KB.

Network:

• Use VMXNET3 or synthetic vNIC adapter.

• Turn on RSS for vNICs in Device Manager.

• Allocate at least 2 pNICs to Exchange.

Microsoft on network:

Synthetic adapters are the preferred option for most virtual machine configurations because they use a dedicated VMBus to communicate between the virtual NIC and the physical NIC. This results in reduced CPU cycles, as well as much lower hypervisor/guest transitions per operation. The driver for the synthetic adapter is included with the Integration Services that are installed with the Windows Server 2012 guest operating system. At minimum, customers should use the default synthetic vNIC to drive higher levels of performance. In addition, should the physical network card support them, the administrator should take advantage of a number of the NIC offloads that can further increase performance.

Single Root I/O Virtualization (SR-IOV) can provide the highest levels of networking performance for virtualized Exchange virtual machines. Check with your hardware vendor for support because there may be a BIOS and firmware update required to enable SR-IOV.

VMware on network:

For Exchange 2013 virtual machines participating in a database availability group (DAG), configure at least two virtual network interfaces, connected to different VLANs or networks. These interfaces provide access for Messaging Application Programming Interface (MAPI) and replication traffic.

Although Exchange 2013 DAG members can be deployed with a single network interface for both MAPI and replication traffic, Microsoft recommends using separate network interfaces for each traffic type. The use of at least two network interfaces allows DAG members to distinguish between system and network failures.

If Exchange servers using VMXNET3 experience packet loss during high-volume DAG replication, gradually increase the number of buffers in the guest operating system, as described in VMware KB 2039495.

Performance:

• Select the high performance power plan in Windows.

• Use affinity/anti-affinity rules: keep servers that work in line with each other (e.g., CAS1 and MBX1) on the same host to avoid network traffic between them, and keep DAG members and/or multiple CAS servers on different hosts for high availability.

• Set power policy to high-performance in vSphere.

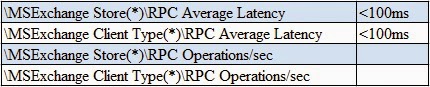

VMware Performance Counters for Exchange

Storage performance monitoring:

RPC average latency:

Watch for high query latencies on Exchange servers and high CPU utilization on Active Directory:

Allocate enough RAM to the Active Directory domain controllers so that they can cache the entire database file (NTDS.dit).

For large deployments, research the issues related to NTLM auth and MaxConcurrentAPI.

Other recommendations:

Microsoft:

For an Exchange 2013 virtual machine, do not configure the virtual machine to save state. We recommend that you configure the virtual machine to shut down, because this minimizes the chance that the virtual machine becomes corrupted. When a shutdown happens, all running jobs can finish, and there will be no synchronization issues when the virtual machine restarts (for example, a Mailbox role server within a DAG replicating to another DAG member).

Use the Failover Priority setting to ensure that, upon failover, the Exchange 2013 virtual machines start in advance of other, less important virtual machines. You can set the Failover Priority setting for the Exchange 2013 virtual machine to high. This ensures that even under contention, the Exchange 2013 virtual machine, upon failover, will successfully start and receive the resources it needs to perform at the desired levels, taking resources from other currently running virtual machines if required.

When performing live migration of DAG members, follow these key points:

• If the server offline time exceeds five seconds, the DAG node will be evicted from the cluster. Ensure that the hypervisor and host-based clustering use the Live Migration technology in Hyper-V to help migrate resources with no perceived downtime.

• If you are raising the heartbeat timeout threshold, perform testing to ensure that migration succeeds within the configured timeout period.

• On the Live Migration network, enable jumbo frames on the network interface for each host. In addition, verify that the switch handling network traffic is configured to support jumbo frames.

• Deploy as much bandwidth as possible for the Live Migration network to ensure that the live migration completes as quickly as possible.

VMware:

Allowing two nodes from the same DAG to run on the same ESXi host for an extended period is not recommended when using symmetrical mailbox database distribution. DRS anti-affinity rules can be used to mitigate the risk of running active and passive mailbox databases on the same ESXi host.

During a vSphere vMotion operation, memory pages are copied from the source ESXi host to the destination. These pages are copied while the virtual machine is running. During the transition of the virtual machine from running on the source to the destination host, a very slight disruption in network connectivity might occur, typically characterized by a single dropped ping. In most cases this is not a concern; however, in highly active environments, this disruption might be enough to trigger the cluster to evict the DAG node temporarily, causing a database failover. To mitigate cluster node eviction, the cluster heartbeat interval can be adjusted.

The Windows Failover Cluster parameter SameSubnetDelay can be modified to help mitigate database failovers during vSphere vMotion of DAG members. This parameter controls how often cluster heartbeat communication is transmitted. The default interval is 1000ms (1 second). The default threshold for missed packets is 5, after which the cluster service determines that the node has failed. Testing has shown that by increasing the transmission interval to 2000ms (2 seconds) and keeping the threshold at 5 intervals, vSphere vMotion migrations can be performed with reduced occurrences of database failover.

Microsoft recommends using a maximum value of 10 seconds for the cluster heartbeat timeout. In this configuration the maximum recommended value is used by configuring a heartbeat interval of 2 seconds (2000 milliseconds) and a threshold of 5 (default).
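The effective heartbeat timeout is the delay multiplied by the threshold, so the tuning described above can be checked arithmetically. A sketch; SameSubnetDelay and SameSubnetThreshold are the Windows Failover Cluster properties involved, while the function name is hypothetical.

```python
MAX_RECOMMENDED_TIMEOUT_MS = 10_000  # Microsoft's recommended maximum (10 seconds)


def heartbeat_timeout_ms(same_subnet_delay_ms: int, same_subnet_threshold: int) -> int:
    """Time without heartbeats before the cluster considers a node failed."""
    return same_subnet_delay_ms * same_subnet_threshold


default_ms = heartbeat_timeout_ms(1000, 5)  # default: 1 s interval, 5 missed heartbeats
tuned_ms = heartbeat_timeout_ms(2000, 5)  # tuned for vMotion: 2 s interval, same threshold
print(default_ms, tuned_ms, tuned_ms <= MAX_RECOMMENDED_TIMEOUT_MS)  # 5000 10000 True
```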

Database failover due to vSphere vMotion operations can be mitigated by using multiple dedicated vSphere vMotion network interfaces. In most cases, the interfaces that are used for vSphere vMotion are also used for management traffic. Because management traffic is relatively light, this does not add significant overhead.

Using jumbo frames reduces the processing overhead to provide the best possible performance by reducing the number of frames that must be generated and transmitted by the system. During testing, VMware had an opportunity to test vSphere vMotion migration of DAG nodes with and without jumbo frames enabled. Results showed that with jumbo frames enabled for all VMkernel ports and on the VMware vNetwork Distributed Switch, vSphere vMotion migrations of DAG member virtual machines completed successfully. During these migrations, no database failovers occurred, and there was no need to modify the cluster heartbeat setting.

Ask The Perf Guy: What’s The Story With Hyperthreading and Virtualization?

Best Practices for Virtualizing & Managing Exchange 2013

Large packet loss at the guest OS level on the VMXNET3 vNIC in ESXi 5.x / 4.x.

Microsoft Clustering on VMware vSphere: Guidelines for supported configurations

Microsoft Exchange Server (2010 & 2013) Best Practices and Design Guidelines for EMC Storage 1

Microsoft Exchange Server (2010 & 2013) Best Practices and Design Guidelines for EMC Storage 2

Microsoft Exchange 2013 on VMware Availability and Recovery Options

Microsoft Exchange 2013 on VMware Best Practices Guide

Microsoft Exchange 2013 on VMware Design and Sizing Guide

Microsoft Exchange Server 2013 Sizing

Monitoring and Tuning Microsoft Exchange Server 2013 Performance

Poor network performance or high network latency on Windows virtual machines

Processor Query Tool

The Standard Performance Evaluation Corporation

Virtual Receive-side Scaling in Windows Server 2012 R2

Virtualization in Microsoft Exchange Server 2013

VMWorld 2013: Successfully Virtualize Microsoft Exchange Server


Exchange

Thursday, 12 February 2015

Saturday, 7 February 2015

Sizing Exchange 2013 for capacity and performance

Exchange 2013 - compute resource calculator

Exchange 2013 - storage space capacity calculator

Exchange 2013 Server Role Requirements Calculator

Space requirements

Gather requirements and user data:

Requires 2 pieces of data:

• The average number of messages per user per day

• The average message size

These can be obtained by using:

• Performance monitor: MSExchangeIS\Messages Submitted/sec and MSExchangeIS\Messages Delivered/sec and transport performance counters

• The MessageStats script: User Profile Analysis for Exchange Server 2007/2010/2013

• SCOM reporting (Daily Mailflow Statistics)

Determine mailbox size:

Consider 3 factors: the mailbox storage quota, database white space, and recoverable items.

The estimated size of database whitespace is one day’s worth of messaging content:

Number of messages per day x average message size

Deleted item retention at its default time frame (14 days) increases mailbox size by ~1.2%.

Calendar item version logging (on by default) increases mailbox size by ~3%.

To calculate the Recoverable Items folder size, these percentages are multiplied by the mailbox quota:

mbx quota x 0.012 + mbx quota x 0.03

So, the total mailbox size is the sum of these 3 factors:

Mailbox Size on disk = mailbox quota + database whitespace + Recoverable Items Folder
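The three factors can be combined in a few lines. A sketch with a hypothetical profile (2 GB quota, 150 messages per day, 75 KB average message size) and a hypothetical function name.

```python
def mailbox_size_on_disk_mb(quota_mb: float, messages_per_day: float, avg_message_kb: float) -> float:
    """Mailbox size on disk = quota + database whitespace + Recoverable Items folder."""
    whitespace_mb = messages_per_day * avg_message_kb / 1024  # one day of messaging content
    recoverable_items_mb = quota_mb * 0.012 + quota_mb * 0.03  # deleted item retention + calendar logging
    return quota_mb + whitespace_mb + recoverable_items_mb


# Hypothetical profile: 2 GB quota, 150 messages/day, 75 KB average message size.
print(round(mailbox_size_on_disk_mb(2048, 150, 75), 1))  # 2145.0
```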

Content indexing:

The space required for files related to the content indexing process can be estimated as 20% of the database size.

Per-Database Content Indexing Space = database size x 0.20

You must additionally size for one additional content index (e.g. an additional 20% of one of the mailbox databases on the volume) in order to allow content indexing maintenance tasks (specifically the master merge process) to complete.

So, I simply increased the total content indexing to 30% of the total database space:

Total Content Indexing Space = total DBs space requirement x 0.30
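The per-database method and the simplified 30% rule can be compared side by side. A sketch, assuming the extra master-merge index is sized on the largest database on the volume (the text only says "one of the mailbox databases"); the function name and database sizes are hypothetical.

```python
def content_index_space_gb(db_sizes_gb: list[float]) -> tuple[float, float]:
    """Return (per-database method, simplified 30% method) content index space in GB."""
    per_db = [size * 0.20 for size in db_sizes_gb]
    # 20% per database, plus one extra 20% index so master merge can complete
    # (assumption: sized on the largest database on the volume).
    per_db_method = sum(per_db) + max(per_db)
    simplified_method = sum(db_sizes_gb) * 0.30
    return per_db_method, simplified_method


print(content_index_space_gb([500, 500, 500, 500]))  # (500.0, 600.0)
```

With four equal databases, the simplified 30% rule leaves more headroom than the per-database method.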

Log space:

The value for Number of transaction logs generated per day is based on the message profile selected and the average message size. It indicates how many transaction logs will be generated per mailbox per day. The log generation numbers per message profile account for:

• Message size impact

• Amount of data sent/received

• Database health maintenance operations

• Records Management operations

• Data stored in a mailbox that is not a message (tasks, calendar appointments, contacts)

• Forced log rollover (a mechanism that periodically closes the current transaction log file)

• If the average message size doubles to 150 KB, the logs generated per mailbox increase by a factor of 1.9. This number represents the percentage of the database that contains the attachments and message tables (message bodies and attachments).

• Thereafter, as message size doubles beyond 150 KB, the log generation rate per mailbox also doubles, increasing from 1.9 to 3.8.

In addition to this, the log space requirement factors in 7 days of log truncation failure (it’s assumed that a full DB backup runs daily and truncates logs regularly, so this space provides capacity for a week of logs in case the backup keeps failing for 7 days) and logs generated by moving 1% of all mailboxes per week.

Log capacity to support 7 days of truncation failure = (number of mailboxes x number of logs/day) x 7 days x 1 MB (transaction log file size)

Log capacity to support 1% mailbox moves per week = number of mailboxes x 0.01 x mailbox size

So, the total log capacity is calculated as:

Total log capacity required = Log capacity to support 7 days of truncation failure + Log capacity to support 1% mailbox moves per week
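The two log components can be summed as follows. A sketch, assuming 1 MB transaction log files (the Exchange 2013 log file size) and a hypothetical logs-per-mailbox figure, since the per-profile table is not reproduced here; the function name and inputs are hypothetical.

```python
LOG_FILE_MB = 1  # Exchange 2013 transaction log files are 1 MB each


def log_capacity_gb(mailboxes: int, logs_per_mailbox_per_day: float, mailbox_size_gb: float,
                    truncation_failure_days: int = 7, weekly_move_fraction: float = 0.01) -> float:
    """Log space = 7 days of truncation failure + 1% of mailboxes moved per week."""
    truncation_gb = mailboxes * logs_per_mailbox_per_day * truncation_failure_days * LOG_FILE_MB / 1024
    moves_gb = mailboxes * weekly_move_fraction * mailbox_size_gb
    return truncation_gb + moves_gb


# Hypothetical: 5,000 mailboxes, 30 logs per mailbox per day, 2 GB mailboxes.
print(round(log_capacity_gb(5000, 30, 2), 2))  # 1125.39
```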

Compute requirements

Calculate IOPS requirements

Storage bandwidth requirements

While IOPS requirements are usually the primary storage throughput concern when designing an Exchange solution, it is possible to run up against bandwidth limitations with various types of storage subsystems. The IOPS sizing guidance above is looking specifically at transactional (somewhat random) IOPS and is ignoring the sequential IO portion of the workload. One place that sequential IO becomes a concern is with storage solutions that are running a large amount of sequential IO through a common channel. A common example of this type of load is the ongoing background database maintenance (BDM) which runs continuously on Exchange mailbox databases. While this BDM workload might not be significant for a few databases stored on a JBOD drive, it may become a concern if all of the mailbox database volumes are presented through a common iSCSI or Fibre Channel interface. In that case, the bandwidth of that common channel must be considered to ensure that the solution doesn’t bottleneck due to these IO patterns.

In Exchange 2013, we expect to consume approximately 1MB/sec/database copy for BDM which is a significant reduction from Exchange 2010.
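A quick way to check whether BDM alone threatens a shared channel is to compare its aggregate rate with the channel’s usable bandwidth. A sketch; the 200 copies and the ~1,000 MB/s usable figure are hypothetical, as is the function name.

```python
BDM_MB_S_PER_COPY = 1.0  # approximate Exchange 2013 BDM rate per database copy


def bdm_share_of_channel(database_copies: int, channel_mb_s: float) -> float:
    """Fraction of a shared storage channel's bandwidth consumed by BDM alone."""
    return database_copies * BDM_MB_S_PER_COPY / channel_mb_s


# Hypothetical: 200 database copies behind one shared link with ~1,000 MB/s usable.
print(f"{bdm_share_of_channel(200, 1000):.0%}")  # 20%
```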

Transport storage requirements

Transport storage capacity is driven by two needs: queuing (including shadow queuing) and Safety Net (which is the replacement for transport dumpster in this release). You can think of the transport storage capacity requirement as the sum of message content on disk in a worst-case scenario, consisting of three elements:

• The current day’s message traffic, along with messages which exist on disk longer than normal expiration settings (like poison queue messages).

• Queued messages waiting for delivery.

• Messages persisted in Safety Net in case they are required for redelivery.

To determine the maximum size of the transport database, we can look at the entire system as a unit and then come up with a per-server value.

Overall Daily Message Traffic = number of users x message profile

Overall Transport DB Size = average message size x overall daily message traffic x (1 + (percentage of messages queued x maximum queue days) + Safety Net hold days) x 2 copies for high availability
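The two formulas above can be chained, then divided across servers for a per-server value. A sketch, assuming the "percentage queued" is given as a fraction and that load is spread evenly over a hypothetical 8 transport servers; all inputs and the function name are hypothetical.

```python
def transport_db_size_gb(users: int, messages_per_user_per_day: float, avg_message_kb: float,
                         fraction_queued: float, max_queue_days: float,
                         safety_net_hold_days: float, ha_copies: int = 2) -> float:
    """Overall transport database size (GB) for the whole organization."""
    daily_messages = users * messages_per_user_per_day
    retention_factor = 1 + (fraction_queued * max_queue_days) + safety_net_hold_days
    total_kb = avg_message_kb * daily_messages * retention_factor * ha_copies
    return total_kb / (1024 * 1024)


# Hypothetical: 10,000 users, 150 messages/day, 75 KB, 50% queued for 1 day, 2 Safety Net days.
overall_gb = transport_db_size_gb(10_000, 150, 75, 0.5, 1, 2)
per_server_gb = overall_gb / 8  # assumption: load spread evenly over 8 transport servers
print(round(overall_gb, 1), round(per_server_gb, 1))  # 751.0 93.9
```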

Microsoft’s best practices guidance for the transport database is to leave it in the Exchange install path (likely on the OS drive) and ensure that the drive supporting that directory path is using a protected write cache disk controller, set to 100% write cache if the controller allows optimization of read/write cache settings. The write cache allows transport database log IO to become effectively “free” and allows transport to handle a much higher level of throughput.

Processor requirements

CPU sizing for the Mailbox role is done in terms of megacycles. A megacycle is a unit of processing work equal to one million CPU cycles. In very simplistic terms, you could think of a 1 MHz CPU performing a megacycle of work every second. Given the guidance provided below for megacycles required for active and passive users at peak, you can estimate the required processor configuration to meet the demands of an Exchange workload. Following are our recommendations on the estimated required megacycles for the various user profiles.

The internal architecture of modern processors is different enough between manufacturers as well as within product lines of a single manufacturer that it requires an additional normalization step to determine the available processing power for a particular CPU. We recommend using the SPECint_rate2006 benchmark from the Standard Performance Evaluation Corporation.

Keep in mind that a good Exchange design should never plan to run servers at 100% of CPU capacity. In general, 80% CPU utilization in a failure scenario is a reasonable target for most customers. Because this high CPU utilization is expected only during a failure scenario, servers in a highly available Exchange solution will often run with relatively low CPU utilization during normal operation. Additionally, there may be very good reasons to target a lower maximum CPU utilization, particularly in cases where unanticipated spikes in load may result in acute capacity issues.
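The SPECint_rate2006 normalization and the 80% target can be combined into a rough core count. A sketch under stated assumptions: the normalization scales a baseline platform’s clock speed by the ratio of per-core SPECint_rate2006 scores, and the baseline score, baseline MHz, megacycles per mailbox, and server figures below are all hypothetical placeholders (take real values from the Microsoft sizing guidance and spec.org).

```python
import math


def megacycles_per_core(server_specint_rate: float, server_cores: int,
                        baseline_per_core_score: float, baseline_mhz: float) -> float:
    """Express one core of the target server in megacycles of the baseline platform."""
    server_per_core_score = server_specint_rate / server_cores
    return server_per_core_score * baseline_mhz / baseline_per_core_score


def cores_required(active_mailboxes: int, megacycles_per_mailbox: float,
                   per_core_megacycles: float, max_utilization: float = 0.80) -> int:
    """Cores needed so that worst-case (failure) load stays at or below max_utilization."""
    demand = active_mailboxes * megacycles_per_mailbox
    return math.ceil(demand / (per_core_megacycles * max_utilization))


# Hypothetical inputs: SPECint_rate2006 of 700 on 20 cores, baseline 30 per core at 2,000 MHz.
per_core = megacycles_per_core(700, 20, baseline_per_core_score=30, baseline_mhz=2000)
print(round(per_core))  # 2333
print(cores_required(4000, 3.0, per_core))  # 7
```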

Memory requirements

Recommended amount of memory for the Mailbox role on a per mailbox basis.

To determine the amount of memory that should be provisioned on a server, take the number of active mailboxes per-server in a worst-case failure and multiply by the value associated with the expected user profile. From there, round up to a value that makes sense from a purchasing perspective (i.e. it may be cheaper to configure 128GB of RAM compared to a smaller amount of RAM depending on slot options and memory module costs).

Mailbox Memory per-server = (worst-case active database copies per-server x users per-database x memory per-active mailbox)
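A sketch of the per-server calculation followed by rounding up to a purchasable size. The per-mailbox memory value comes from a table not reproduced here, so 12 MB is a hypothetical placeholder, as are the 16 GB rounding step and the function names.

```python
import math


def mailbox_memory_gb(worst_case_active_copies: int, users_per_database: int,
                      mb_per_active_mailbox: float) -> float:
    """Mailbox role memory per server (GB) in the worst-case failure scenario."""
    return worst_case_active_copies * users_per_database * mb_per_active_mailbox / 1024


def round_up_for_purchase(required_gb: float, step_gb: int = 16) -> int:
    """Round up to a purchasable size; the 16 GB step is a hypothetical module size."""
    return math.ceil(required_gb / step_gb) * step_gb


required = mailbox_memory_gb(12, 250, 12)  # hypothetical: 12 copies x 250 users x 12 MB
print(round(required, 2), round_up_for_purchase(required))  # 35.16 48
```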

It’s important to note that the content indexing technology included with Exchange 2013 uses a relatively large amount of memory to allow both indexing and query processing to occur very quickly. This memory usage scales with the number of items indexed, meaning that as the number of total items stored on a Mailbox role server increases (for both active and passive copies), memory requirements for the content indexing processes will increase as well. In general, the guidance on memory sizing presented here assumes approximately 15% of the memory on the system will be available for the content indexing processes which means that with a 75KB average message size, we can accommodate mailbox sizes of 3GB at 50 message profile up to 32GB at the 500 message profile without adjusting the memory sizing. If your deployment will have an extremely small average message size or an extremely large average mailbox size, you may need to add additional memory to accommodate the content indexing processes.

Multi-role server deployments will have an additional memory requirement beyond the amounts specified above. CAS memory is computed as a base memory requirement for the CAS components (2GB) plus additional memory that scales based on the expected workload. This overall CAS memory requirement on a multi-role server can be computed using the following formula:

CAS Memory per-server = 2 GB + ((2 GB x ((max number of users x megacycles per user) x 0.375) / per-core megacycles))
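Read as code, the formula takes 37.5% of the mailbox megacycle demand as CAS demand, converts it to cores, and adds 2 GB per CAS core to the 2 GB base. A sketch with hypothetical inputs and a hypothetical function name.

```python
def cas_memory_gb(max_users: int, megacycles_per_user: float, per_core_megacycles: float) -> float:
    """CAS memory on a multi-role server: 2 GB base + 2 GB per core of CAS CPU demand."""
    cas_cores = (max_users * megacycles_per_user * 0.375) / per_core_megacycles
    return 2 + 2 * cas_cores


# Hypothetical: 4,000 users at 3.0 megacycles each, 2,000 megacycles per core.
print(cas_memory_gb(4000, 3.0, 2000))  # 6.5
```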

Regardless of whether you are considering a multi-role or a split-role deployment, it is important to ensure that each server has a minimum amount of memory for efficient use of the database cache. There are some scenarios that will produce a relatively small memory requirement from the memory calculations described above. We recommend comparing the per-server memory requirement you have calculated with the following table to ensure you meet the minimum database cache requirements. The guidance is based on total database copies per-server (both active and passive). If the value shown in this table is higher than your calculated per-server memory requirement, adjust your per-server memory requirement to meet the minimum listed in the table.

Unified messaging

With the new architecture of Exchange, Unified Messaging is now installed and ready to be used on every Mailbox and CAS. The CPU and memory guidance provided here assumes some moderate UM utilization. In a deployment with significant UM utilization with very high call concurrency, additional sizing may need to be performed to provide the best possible user experience. As in Exchange 2010, we recommend using a 100 concurrent call per-server limit as the maximum possible UM concurrency, and scale out the deployment if the sizing of your deployment becomes bound on this limit. Additionally, voicemail transcription is a very CPU-intensive operation, and by design will only transcribe messages when there is enough available CPU on the machine. Each voicemail message requires 1 CPU core for the duration of the transcription operation, and if that amount of CPU cannot be obtained, transcription will be skipped. In deployments that anticipate a high amount of voicemail transcription concurrency, server configurations may need to be adjusted to increase CPU resources, or the number of users per server may need to be scaled back to allow for more available CPU for voicemail transcription operations.

Active Directory capacity for Exchange 2013

The recommendation is a ratio of 1 Active Directory global catalog processor core for every 8 Mailbox role processor cores handling active load.

AD core requirement = ((mailbox users x megacycles per active user) / megacycles per core) / 8
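The 1:8 ratio above can be computed as follows. This is a sketch only. The example inputs (10,000 users, 4.25 megacycles per active user, 2,000 megacycles per core) are hypothetical, and rounding up to whole cores is a practical choice added here, not part of the stated formula.

```python
import math


def ad_gc_cores(mailbox_users: int,
                mcycles_per_active_user: float,
                mcycles_per_core: float) -> float:
    """Global catalog cores: 1 GC core per 8 Mailbox cores of active load."""
    mailbox_cores = (mailbox_users * mcycles_per_active_user) / mcycles_per_core
    return mailbox_cores / 8


# Hypothetical example: 10,000 users at 4.25 Mcycles, 2,000 Mcycles per core
cores = ad_gc_cores(10000, 4.25, 2000)
print(cores, math.ceil(cores))  # 2.65625 3
```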

Ask the Perf Guy: Sizing Exchange 2013 Deployments


Exchange 2013 - storage space capacity calculator

Exchange 2013 Server Role Requirements Calculator

Space requirements

Gather requirements and user data:

Requires 2 pieces of data:

• The average number of messages per user per day

• The average message size

These can be obtained by using:

• Performance Monitor: the MSExchangeIS\Messages Submitted/sec and MSExchangeIS\Messages Delivered/sec counters, plus the transport performance counters

• The MessageStats script: User Profile Analysis for Exchange Server 2007/2010/2013

• SCOM reporting (Daily Mailflow Statistics)

Determine mailbox size:

Consider 3 factors: the mailbox storage quota, database whitespace, and the Recoverable Items folder.

Database whitespace is estimated as one day's worth of messaging content:

Whitespace = number of messages per day x average message size

Deleted item retention at its default time frame (14 days) increases mailbox size by ~1.2%.

Calendar item version logging (on by default) increases mailbox size by ~3%.

To estimate the Recoverable Items folder size, these percentages are applied to the mailbox quota:

Recoverable Items Folder = mbx quota x 0.012 + mbx quota x 0.03

So, the total mailbox size is the sum of these 3 factors:

Mailbox Size on disk = mailbox quota + database whitespace + Recoverable Items Folder
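The three factors can be combined in code like this. The sketch assumes 1 MB = 1024 KB when converting whitespace into the quota's units. The function name and the example values (2 GB quota, 100 messages per day, 75 KB average size) are hypothetical.

```python
def mailbox_size_on_disk_mb(quota_mb: float,
                            messages_per_day: int,
                            avg_message_kb: float) -> float:
    """Mailbox size on disk = quota + whitespace + Recoverable Items folder."""
    # Whitespace: one day's worth of messaging content (KB -> MB, 1024-based).
    whitespace_mb = messages_per_day * avg_message_kb / 1024
    # Recoverable Items: ~1.2% for deleted item retention (14 days)
    # plus ~3% for calendar version logging, both applied to the quota.
    recoverable_items_mb = quota_mb * 0.012 + quota_mb * 0.03
    return quota_mb + whitespace_mb + recoverable_items_mb


# Hypothetical example: 2 GB quota, 100 messages/day, 75 KB average size
print(round(mailbox_size_on_disk_mb(2048, 100, 75), 2))  # 2141.34
```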

Content indexing:

The space required for files related to the content indexing process can be estimated as 20% of the database size.

Per-Database Content Indexing Space = database size x 0.20

You must also size for one additional content index (i.e., another 20% of one of the mailbox databases on the volume) so that content indexing maintenance tasks (specifically the master merge process) can complete.

To keep it simple, I increased the total content indexing allowance to 30% of the total database space:

Total Content Indexing Space = total DBs space requirement x 0.30
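This sketch sets the per-database rule (20% per database plus one extra 20% index for master merge) beside the simplified 30% shortcut, so you can check whether the shortcut covers your layout. The function names and the four-database example are hypothetical, and the code assumes at least one database on the volume.

```python
def content_index_space_gb(db_sizes_gb: list[float]) -> float:
    """20% per database, plus one extra 20% index for master merge headroom."""
    per_db = [size * 0.20 for size in db_sizes_gb]  # needs at least one DB
    return sum(per_db) + max(per_db)


def content_index_space_simplified_gb(db_sizes_gb: list[float]) -> float:
    """The simplified 30%-of-total-database-space rule."""
    return sum(db_sizes_gb) * 0.30


# Hypothetical volume holding four 500 GB databases
dbs = [500.0] * 4
print(round(content_index_space_gb(dbs), 1))             # 500.0
print(round(content_index_space_simplified_gb(dbs), 1))  # 600.0
```

With N equally sized databases, the 30% rule is at least as large as the per-database rule whenever N is 2 or more. For a single database it falls short (0.3 versus 0.4 of the database size), so that case needs the per-database calculation.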

Log space:

The value for Number of transaction logs generated per day is based on the message profile selected and the average message size. It indicates how many transaction logs will be generated per mailbox per day. The log generation numbers per message profile account for:

• Message size impact

• Amount of data sent/received

• Database health maintenance operations

• Records Management operations

• Data stored in a mailbox that is not a message (tasks, calendar appointments, contacts)

• Forced log rollover (a mechanism that periodically closes the current transaction log file)

Message profile (messages sent/received per mailbox per day, 75 KB average message size) | Transaction logs generated per mailbox per day
50 | 10
100 | 20
150 | 30
200 | 40

• If the average message size doubles to 150 KB, the number of logs generated per mailbox increases by a factor of 1.9. This number represents the percentage of the database that contains the attachment and message tables (message bodies and attachments).

• Thereafter, each time the message size doubles beyond 150 KB, the log generation rate per mailbox also doubles (for example, from 1.9 at 150 KB to 3.8 at 300 KB).

In addition, the log space requirement accounts for 7 days of log truncation failure (it’s assumed that a full DB backup runs daily and normally truncates the logs, so this space holds a week of logs in case the backup keeps failing for 7 days) and for the logs generated by moving 1% of all mailboxes per week.

Log capacity to support 7 days of truncation failure = (number of mailboxes x number of logs/day) x 7 days x 1 MB (each Exchange 2013 transaction log file is 1 MB)

Log capacity to support 1% mailbox moves per week = number of mailboxes x 0.01 x mailbox size

So, the total log capacity is calculated as:

Total log capacity required = Log capacity to support 7 days of truncation failure + Log capacity to support 1% mailbox moves per week
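Here is a sketch of the total log capacity calculation, taking logs per mailbox per day from the 75 KB message profile table above. For larger average message sizes, multiply the logs-per-day figure by the factor described above (1.9 at 150 KB, 3.8 at 300 KB). The code does not interpolate between those points. The function name and example values (5,000 mailboxes, 2,000 MB mailboxes) are hypothetical.

```python
LOG_FILE_MB = 1  # Exchange 2013 transaction log files are 1 MB each

# Logs generated per mailbox per day at a 75 KB average message size
# (from the message profile table above).
LOGS_PER_MAILBOX_PER_DAY_75KB = {50: 10, 100: 20, 150: 30, 200: 40}


def log_capacity_mb(mailboxes: int,
                    logs_per_mailbox_per_day: float,
                    mailbox_size_mb: float,
                    truncation_failure_days: int = 7,
                    weekly_move_fraction: float = 0.01) -> float:
    """Total log capacity: truncation-failure headroom + weekly mailbox moves."""
    truncation_mb = (mailboxes * logs_per_mailbox_per_day
                     * truncation_failure_days * LOG_FILE_MB)
    moves_mb = mailboxes * weekly_move_fraction * mailbox_size_mb
    return truncation_mb + moves_mb


# Hypothetical example: 5,000 mailboxes, 100-message profile, 2,000 MB mailboxes
logs = LOGS_PER_MAILBOX_PER_DAY_75KB[100]
print(log_capacity_mb(5000, logs, 2000))  # 800000.0 (MB)
```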

Compute requirements

Calculate IOPS requirements

Messages sent/received per mailbox per day | Estimated IOPS per mailbox
50 | 0.034
100 | 0.067
150 | 0.101
200 | 0.134
250 | 0.168
300 | 0.201
350 | 0.235
400 | 0.268
450 | 0.302
500 | 0.335
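Below is a small lookup-based sketch of the IOPS table above. It covers only the listed profiles and raises `KeyError` for anything else, because interpolating between rows would be an assumption the table does not make. The figures are transactional IOPS only and exclude the sequential BDM I/O discussed next. The example population is hypothetical.

```python
# Estimated IOPS per mailbox by messages sent/received per day (table above).
IOPS_PER_MAILBOX = {
    50: 0.034, 100: 0.067, 150: 0.101, 200: 0.134, 250: 0.168,
    300: 0.201, 350: 0.235, 400: 0.268, 450: 0.302, 500: 0.335,
}


def transactional_iops(mailboxes: int, messages_per_day: int) -> float:
    """Transactional IOPS estimate for a set of mailboxes of one profile."""
    return mailboxes * IOPS_PER_MAILBOX[messages_per_day]


# Hypothetical example: 5,000 mailboxes at the 150-message profile
print(round(transactional_iops(5000, 150), 1))  # 505.0
```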

Storage bandwidth requirements

While IOPS requirements are usually the primary storage throughput concern when designing an Exchange solution, it is possible to run up against bandwidth limitations with various types of storage subsystems. The IOPS sizing guidance above is looking specifically at transactional (somewhat random) IOPS and is ignoring the sequential IO portion of the workload. One place that sequential IO becomes a concern is with storage solutions that are running a large amount of sequential IO through a common channel. A common example of this type of load is the ongoing background database maintenance (BDM) which runs continuously on Exchange mailbox databases. While this BDM workload might not be significant for a few databases stored on a JBOD drive, it may become a concern if all of the mailbox database volumes are presented through a common iSCSI or Fibre Channel interface. In that case, the bandwidth of that common channel must be considered to ensure that the solution doesn’t bottleneck due to these IO patterns.

In Exchange 2013, we expect to consume approximately 1 MB/s per database copy for BDM, which is a significant reduction from Exchange 2010.
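To see when BDM alone could saturate a shared iSCSI or Fibre Channel path, compare the number of database copies behind it (at ~1 MB/s each) with the channel's bandwidth. This sketch uses the raw line rate (Gbit/s x 1000 / 8 = MB/s) and ignores protocol overhead and transactional I/O, so real headroom will be smaller. The function name and the 200-copy example are hypothetical.

```python
def bdm_channel_utilization(db_copies: int,
                            channel_gbit_s: float,
                            mb_s_per_copy: float = 1.0) -> float:
    """Fraction of a shared channel's raw bandwidth consumed by BDM alone.

    Uses the raw line rate and ignores protocol overhead.
    """
    channel_mb_s = channel_gbit_s * 1000 / 8
    return db_copies * mb_s_per_copy / channel_mb_s


# Hypothetical example: 200 database copies behind one shared link
print(bdm_channel_utilization(200, 1))   # 1.6  -> a 1 Gbit/s link is saturated
print(bdm_channel_utilization(200, 10))  # 0.16 -> a 10 Gbit/s link has room
```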

Transport storage requirements

Transport storage capacity is driven by two needs: queuing (including shadow queuing) and Safety Net (which is the replacement for transport dumpster in this release). You can think of the transport storage capacity requirement as the sum of message content on disk in a worst-case scenario, consisting of three elements:

• The current day’s message traffic, along with messages which exist on disk longer than normal expiration settings (like poison queue messages).

• Queued messages waiting for delivery.

• Messages persisted in Safety Net in case they are required for redelivery.

To determine the maximum size of the transport database, we can look at the entire system as a unit and then come up with a per-server value.

Overall Daily Message Traffic = number of users x message profile

Overall Transport DB Size = average message size x overall daily message traffic x (1 + (percentage of messages queued x maximum queue days) + Safety Net hold days) x 2 copies for high availability
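This sketch implements the system-wide transport database formula above. The percentage of messages queued is expressed as a fraction (0.5 = 50%), and message size is converted from KB to GB on a 1024 basis. The text only says to derive a per-server value from the system-wide total; splitting it evenly across transport servers is an assumption made here. All example inputs are hypothetical.

```python
def transport_db_size_gb(users: int,
                         messages_per_day: int,
                         avg_message_kb: float,
                         pct_queued: float,
                         max_queue_days: float,
                         safety_net_days: float,
                         ha_copies: int = 2) -> float:
    """Overall transport DB size (GB) for the whole system."""
    daily_messages = users * messages_per_day
    daily_gb = avg_message_kb * daily_messages / (1024 * 1024)  # KB -> GB
    factor = 1 + pct_queued * max_queue_days + safety_net_days
    return daily_gb * factor * ha_copies


def per_server_gb(overall_gb: float, transport_servers: int) -> float:
    """Assumes traffic is spread evenly across transport servers."""
    return overall_gb / transport_servers


overall = transport_db_size_gb(10000, 100, 75, pct_queued=0.5,
                               max_queue_days=1, safety_net_days=2)
print(round(overall, 1), round(per_server_gb(overall, 4), 1))  # 500.7 125.2
```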

Microsoft’s best practices guidance for the transport database is to leave it in the Exchange install path (likely on the OS drive) and ensure that the drive supporting that directory path is using a protected write cache disk controller, set to 100% write cache if the controller allows optimization of read/write cache settings. The write cache allows transport database log IO to become effectively “free” and allows transport to handle a much higher level of throughput.

Processor requirements

CPU sizing for the Mailbox role is done in terms of megacycles. A megacycle is a unit of processing work equal to one million CPU cycles. In very simplistic terms, you could think of a 1 MHz CPU performing a megacycle of work every second. Given the guidance provided below for megacycles required for active and passive users at peak, you can estimate the required processor configuration to meet the demands of an Exchange workload. Following are our recommendations on the estimated required megacycles for the various user profiles.

Messages sent/received per mailbox per day | Mcycles per user, active DB copy or standalone (Mailbox role only) | Mcycles per user, active DB copy or standalone (multi-role) | Mcycles per user, passive DB copy
50 | 2.13 | 2.93 | 0.69
100 | 4.25 | 5.84 | 1.37
150 | 6.38 | 8.77 | 2.06
200 | 8.50 | 11.69 | 2.74
250 | 10.63 | 14.62 | 3.43
300 | 12.75 | 17.53 | 4.11
350 | 14.88 | 20.46 | 4.80
400 | 17.00 | 23.38 | 5.48
450 | 19.13 | 26.30 | 6.17
500 | 21.25 | 29.22 | 6.85

The internal architecture of modern processors is different enough between manufacturers as well as within product lines of a single manufacturer that it requires an additional normalization step to determine the available processing power for a particular CPU. We recommend using the SPECint_rate2006 benchmark from the Standard Performance Evaluation Corporation.

Keep in mind that a good Exchange design should never plan to run servers at 100% of CPU capacity. In general, 80% CPU utilization in a failure scenario is a reasonable target for most customers. Because that peak is expected only during a failure scenario, servers in a highly available Exchange solution will often run at relatively low CPU utilization during normal operation. Additionally, there may be very good reasons to target a lower maximum CPU utilization, particularly where unanticipated spikes in load could cause acute capacity issues.
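The megacycle table and the 80% target can be combined into a core-count estimate. This sketch assumes you have already normalized your CPU to a per-core megacycle figure using SPECint_rate2006. That normalization step is not reproduced here, and the 2,000 value in the example is hypothetical, as are the worst-case user counts.

```python
import math

# Megacycles per user by message profile (table above):
# (active, Mailbox role only), (active, multi-role), (passive copy)
MCYCLES_PER_USER = {
    50: (2.13, 2.93, 0.69), 100: (4.25, 5.84, 1.37), 150: (6.38, 8.77, 2.06),
    200: (8.50, 11.69, 2.74), 250: (10.63, 14.62, 3.43), 300: (12.75, 17.53, 4.11),
    350: (14.88, 20.46, 4.80), 400: (17.00, 23.38, 5.48), 450: (19.13, 26.30, 6.17),
    500: (21.25, 29.22, 6.85),
}


def required_cores(active_users: int, passive_users: int, profile: int,
                   per_core_mcycles: float, multi_role: bool = False,
                   target_utilization: float = 0.80) -> float:
    """Cores needed so the worst-case load stays at the utilization target."""
    mbx_only, multi, passive = MCYCLES_PER_USER[profile]
    active_rate = multi if multi_role else mbx_only
    demand = active_users * active_rate + passive_users * passive
    return demand / (per_core_mcycles * target_utilization)


# Hypothetical worst case: 4,000 active + 4,000 passive users, 100-message
# profile, Mailbox role only, 2,000 normalized Mcycles per core.
cores = required_cores(4000, 4000, 100, per_core_mcycles=2000)
print(round(cores, 2), math.ceil(cores))  # 14.05 15
```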

Memory requirements

Recommended amount of memory for the Mailbox role on a per mailbox basis.

Messages sent or received per mailbox per day | Mailbox role memory per active mailbox (MB)
50 | 12
100 | 24
150 | 36
200 | 48
250 | 60
300 | 72
350 | 84
400 | 96
450 | 108
500 | 120

To determine the amount of memory that should be provisioned on a server, take the number of active mailboxes per-server in a worst-case failure and multiply by the value associated with the expected user profile. From there, round up to a value that makes sense from a purchasing perspective (e.g., it may be cheaper to configure 128GB of RAM compared to a smaller amount of RAM depending on slot options and memory module costs).

Mailbox Memory per-server = (worst-case active database copies per-server x users per-database x memory per-active mailbox)
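The following sketch applies the per-server formula and then rounds up to a purchasable configuration. The list of purchasable sizes is purely hypothetical; substitute whatever your hardware vendor actually offers. The example (10 worst-case active copies, 400 users per database, 100-message profile) is also hypothetical.

```python
# Mailbox role memory (MB) per active mailbox by message profile (table above).
MEMORY_PER_ACTIVE_MAILBOX_MB = {
    50: 12, 100: 24, 150: 36, 200: 48, 250: 60,
    300: 72, 350: 84, 400: 96, 450: 108, 500: 120,
}

# Hypothetical list of configurations that are convenient to buy.
PURCHASABLE_GB = [16, 24, 32, 48, 64, 96, 128, 192, 256, 384, 512]


def mailbox_memory_gb(worst_case_active_copies: int, users_per_db: int,
                      profile: int) -> float:
    """Worst-case active copies x users per DB x memory per active mailbox."""
    mb = (worst_case_active_copies * users_per_db
          * MEMORY_PER_ACTIVE_MAILBOX_MB[profile])
    return mb / 1024


def round_up_to_purchasable(required_gb: float) -> int:
    """Smallest configuration in PURCHASABLE_GB that meets the requirement."""
    for size in PURCHASABLE_GB:
        if size >= required_gb:
            return size
    raise ValueError("requirement exceeds the largest configuration listed")


need = mailbox_memory_gb(10, 400, 100)
print(need, round_up_to_purchasable(need))  # 93.75 96
```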

It’s important to note that the content indexing technology included with Exchange 2013 uses a relatively large amount of memory to allow both indexing and query processing to occur very quickly. This memory usage scales with the number of items indexed, meaning that as the number of total items stored on a Mailbox role server increases (for both active and passive copies), memory requirements for the content indexing processes will increase as well. In general, the guidance on memory sizing presented here assumes approximately 15% of the memory on the system will be available for the content indexing processes which means that with a 75KB average message size, we can accommodate mailbox sizes of 3GB at 50 message profile up to 32GB at the 500 message profile without adjusting the memory sizing. If your deployment will have an extremely small average message size or an extremely large average mailbox size, you may need to add additional memory to accommodate the content indexing processes.

Multi-role server deployments will have an additional memory requirement beyond the amounts specified above. CAS memory is computed as a base memory requirement for the CAS components (2GB) plus additional memory that scales based on the expected workload. This overall CAS memory requirement on a multi-role server can be computed using the following formula:

CAS Memory per-server = 2 GB + ((2 GB x ((max number of users x megacycles per user) x 0.375) / per-core megacycles))

Regardless of whether you are considering a multi-role or a split-role deployment, it is important to ensure that each server has a minimum amount of memory for efficient use of the database cache. There are some scenarios that will produce a relatively small memory requirement from the memory calculations described above. We recommend comparing the per-server memory requirement you have calculated with the following table to ensure you meet the minimum database cache requirements. The guidance is based on total database copies per-server (both active and passive). If the value shown in this table is higher than your calculated per-server memory requirement, adjust your per-server memory requirement to meet the minimum listed in the table.

Per-server DB copies | Minimum physical memory (GB)
1-10 | 8
11-20 | 10
21-30 | 12
31-40 | 14
41-50 | 16
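The minimum-memory check described above amounts to taking the larger of the calculated value and the table floor. This is a sketch with a hypothetical function name. It deliberately raises an error above 50 copies, because the table gives no value there.

```python
# Minimum physical memory (GB) by total DB copies per server, active + passive
# (table above): (upper bound of the copy range, minimum GB).
MIN_MEMORY_BY_COPIES = [(10, 8), (20, 10), (30, 12), (40, 14), (50, 16)]


def apply_memory_floor(calculated_gb: float, total_db_copies: int) -> float:
    """Raise the calculated per-server memory to the table minimum if needed."""
    for max_copies, minimum_gb in MIN_MEMORY_BY_COPIES:
        if total_db_copies <= max_copies:
            return max(calculated_gb, minimum_gb)
    raise ValueError("the table only covers up to 50 database copies per server")


print(apply_memory_floor(6, 15))   # 10 (table minimum wins)
print(apply_memory_floor(64, 15))  # 64 (calculated value already higher)
```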

Unified messaging

With the new architecture of Exchange, Unified Messaging is now installed and ready to be used on every Mailbox and CAS. The CPU and memory guidance provided here assumes some moderate UM utilization. In a deployment with significant UM utilization with very high call concurrency, additional sizing may need to be performed to provide the best possible user experience. As in Exchange 2010, we recommend treating 100 concurrent calls per server as the maximum UM concurrency, and scaling out the deployment if its sizing becomes bound by this limit. Additionally, voicemail transcription is a very CPU-intensive operation, and by design will only transcribe messages when there is enough available CPU on the machine. Each voicemail message requires 1 CPU core for the duration of the transcription operation, and if that amount of CPU cannot be obtained, transcription will be skipped. In deployments that anticipate a high amount of voicemail transcription concurrency, server configurations may need to be adjusted to increase CPU resources, or the number of users per server may need to be scaled back to allow for more available CPU for voicemail transcription operations.

Active Directory capacity for Exchange 2013

The recommendation is a ratio of 1 Active Directory global catalog processor core for every 8 Mailbox role processor cores handling active load.

AD core requirement = ((mailbox users x megacycles per active user) / megacycles per core) / 8

Ask the Perf Guy: Sizing Exchange 2013 Deployments

